Technology We Wouldn't Have Without World War II

The world changed immeasurably during World War II, starting with the casualties. No one's really sure just how many people died, but The National WWII Museum estimates that number to be somewhere around 15 million dead in battle, another 25 million wounded, and 45 million civilian deaths... although they also note that it's possible that China alone lost more than 50 million civilians.

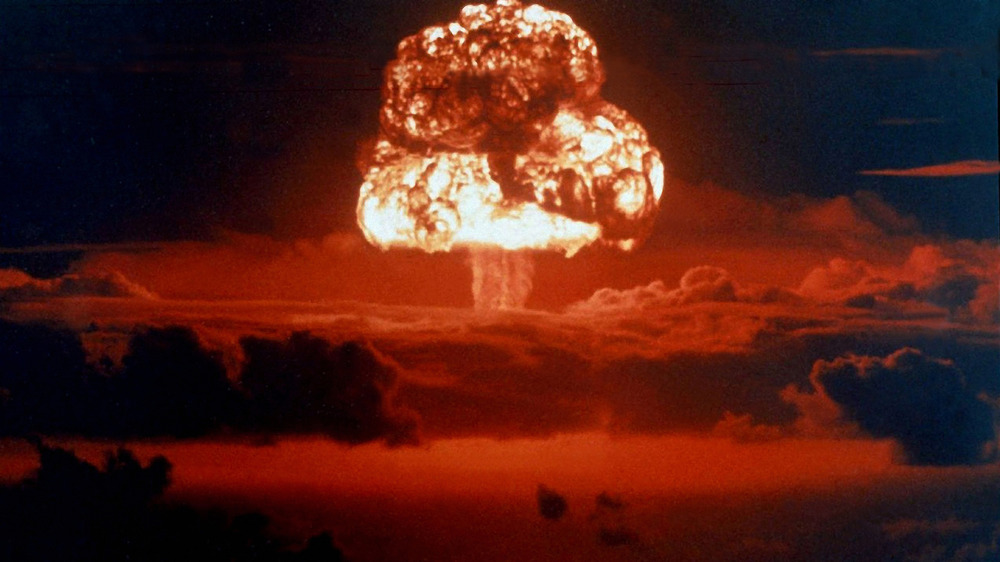

The biggest technological advancement to come out of the war is the one that most are familiar with: the atomic bomb. Research into the development of the bomb started in 1938, per the Atomic Heritage Foundation, with the discovery of nuclear fission. The Manhattan Project was formed in 1942, and within three years, the Allies were looking at the potential — and consequences — of what they'd created.

The atomic bomb isn't the only technological advancement to come out of the war, though. Necessity is the mother of invention, after all, and it turns out that there are a number of inventions that got their start during the war years that we use on a regular basis. There are also some other horrible, terrible, no-good things that we got, too.

Wartime technology revolutionized aviation

The battles of World War II weren't just fought on land and at sea; they were fought in the air, too. Simple Flying says that the advancements in aviation technology we saw during the war were pretty shocking: biplanes gave way to monoplanes, aircraft like the Spitfire made things like retractable landing gear and landing flaps the norm, and planes got a lot faster and more aerodynamic. But let's talk about one advancement in particular, one that made a huge difference in getting everyone else up into the skies: the pressurized cabin.

According to Air & Space Magazine, it wasn't until 1937 that the US Army Air Corps started developing planes with pressurized cabins. Their prototype was a modified Lockheed Electra, and engineers were getting the hang of things like strengthened windows and a redesigned cross-section that could handle the stress of the cabin pressure pushing outward.

Fast forward a few years, and Boeing was building aircraft with pressurized cabins designed in right from the start. Its 307 Stratoliner was carrying passengers in a pressurized cabin by 1940, and the B-29 Superfortress brought pressurization to the bomber fleet. (That name should sound familiar: the Enola Gay and Bockscar, which dropped the atomic bombs on Hiroshima and Nagasaki, were B-29s.) The interiors of airliners have changed a lot in the decades since, but the basic technology is pretty much the same.

Sometimes, the simplest things have the biggest impact

By the time World War II rolled around, combat had gotten increasingly mechanized. Mechanization means one universal thing: a need for fuel.

Up until the war, most gas cans were terrible, inconvenient things that had a tendency to leak along their weld lines. Filling them required a funnel, opening and closing them required a wrench, and emptying them required a spout to make sure the fuel actually made it into the tank it was meant for. Add in the chaos of combat, plus the fact that many could only be used once, and it was a nightmare.

So, the Germans invented the Wehrmacht-Einheitskanister, which became more widely known as the Jerrycan. According to Carryology, it was a total win: it could hold more fuel, had a single weld line and a plastic insert that prevented leaks, expanded and contracted with the temperature, had a lid mechanism that couldn't be removed and so wouldn't get lost, and came in different colors so soldiers could tell at a glance what kind of fuel was inside. It also had three handles, which was surprisingly important: it meant cans could be passed down a line of men incredibly easily.

It wasn't long before the Allies got their hands on some and reverse-engineered the design for their own use. Decades later, it remains the go-to can for transporting fuel for groups like NATO and the United Nations.

One of medicine's greatest achievements was used during World War II

Penicillin was the discovery that brought about "the dawn of the antibiotic age," the American Chemical Society says. It was actually discovered by Alexander Fleming in 1928 — long before the war — and here's the thing: when Fleming first published his findings on how his so-called "mold juice" was able to kill various types of bacteria, he didn't immediately talk about what this might mean for medicine. It wasn't until 1941 that a police officer named Albert Alexander was given the first dose, and it cleared up what would have otherwise been a life-threatening infection ... until supplies ran out, the infection returned, and he died.

It was immediately understood that penicillin was going to have a major impact on the health of countless people, both on the battlefield and at home. The US quickly began working out how to manufacture penicillin on a large scale, and thanks in large part to Pfizer's adaptation of a fermentation process it had perfected for another chemical, citric acid, the antibiotic started making it to the front lines.

Albert Elder was put in charge of coordinating production across 21 companies, writing, "You are urged to impress upon every worker in your plant that penicillin produced today will be saving the life of someone in a few days or curing the disease of someone now incapacitated." Supply rose to meet demand, and penicillin changed the way we treat infection.

No, carrots don't actually help you see in the dark

Everyone's heard the story: carrots are good for your eyes, and they help you see in the dark. Sorry, but according to the Smithsonian, it's a myth that was started to hide the fact that British pilots had another kind of help seeing in the dark: radar. But radar wasn't a WWII invention, not precisely. So why are we talking about it?

Radar was invented in the 1930s, and according to Imperial War Museums, early warning radar stations had already been built all along the British coasts. They could spot an enemy approach at 80 miles, but the stations themselves were huge, with masts that were about 330 feet tall.

By 1940, physicists at the University of Birmingham had found a way to make radar units smaller: the cavity magnetron, which allowed for radar that operated in the microwave range, could produce higher-resolution images, and could be installed in aircraft.

Once it was complete, the British took it to the US and Canada. According to IEEE, the US National Defense Research Committee ran with it, and the creation of MIT's Radiation Laboratory produced a whole series of different radar types. But the cavity magnetron is also something most people have in their homes right now: it's the technology that made the microwave oven possible.

The first mass-produced helicopter flew during World War II

Helicopters have a distinct advantage over planes: because of their vertical lift-off capabilities, there's no runway needed. Take a look at the jungles of the Pacific Front and you'll see at a glance just how valuable a craft that can land and take off without a runway could be.

And it was. According to Air & Space Magazine, 1943 saw the creation of a new kind of combat unit: the Air Commandos, pilots tasked with dropping supplies and troops well behind enemy lines, deep in the jungles of Burma. The job was even more difficult than it sounds, because the craft they needed for it, which they had to get from US Army Air Forces officials at Wright Field, was the Sikorsky YR-4.

According to History, the R-4 was the first helicopter to go into mass production. Igor Sikorsky developed it with the original idea that it would be able to take off easily from a ship's deck. A few modifications later, it became the first helicopter to fly in combat.

Getting them to that point took a lot of convincing, and no little amount of pure gumption. Let's put it this way: the rotor blades were made of laminated pieces of wood. Still, it wasn't long before the military saw the value in this highly maneuverable craft, particularly when it came to spotting wreckage in the jungle and staging rescue missions.

Melts in your mouth, not in your hand!

Everyone's favorite little chocolate candies might not seem like much of a technological advancement, but they were — and they played an important role in World War II.

The seed for the idea was planted in 1932. That, per History, is when Forrest Mars Sr. left the US for the UK. While he was there, he opened another branch of Mars and started making candy bars for the military. There, he saw people eating little chocolate balls that had been coated in a sugar shell.

The idea was that the shell kept the chocolate from melting, and when he headed back to the US, he approached the Hershey-affiliated Bruce Murrie. Long story short, they used Hershey's chocolate to make their new candy — candy that sported their initials, M&M.

By that time, it was 1941. When M&Ms first went into production, they were only sold to the military. There was a good reason for that: that candy-coated shell made them perfect for including in soldiers' C-rations, as even in the hot, humid climate of the Pacific, the candy wouldn't turn into a melty mess. Mars' Newark, New Jersey factory produced around 200,000 pounds of M&Ms each week, and the Huffington Post says that when soldiers returned and the war ended, M&Ms went public.

Night vision became portable because of World War II

Night vision technology is pretty cool because it brings to life something you don't usually expect to see, and everyone likes being in on secrets. According to the Smithsonian, night vision technology dates back to just before World War II, when everyone pretty much knew there was a major conflict looming on the horizon. The first units, known as generation zero, could amplify existing light around 1,000 times, but they were also so big they needed to be moved on flatbed trucks. So, not terribly helpful when you're trying to be stealthy.

That's when the US Army teamed up with RCA to turn that technology into something smaller, more portable, and more useful. Meanwhile, Night Vision Australia says that the Germans were working on it, too, and by the end of the war, they had outfitted at least 50 Panther tanks with night vision. Both sides also developed similar sights for infantry weapons: the "sniperscope" was created for the American M1 and M3 carbines, while German infantry were equipped with a system called the Vampir, mounted on the StG 44 assault rifle. Night vision has come a long way since then, but it's still an invaluable asset to the military and to organizations like search and rescue teams.

But how did anything get fixed before duct tape?

Duct tape can be used to fix almost anything, even people! (Yes, The Healthy says that you can definitely use it to do things like hold wounds closed, prevent a collapsed lung, and protect a scratched or punctured cornea ... if you know what you're doing.) The story behind the invention of duct tape is just as cool as the tape itself.

It turns out that we can thank an Illinois woman named Vesta Stoudt for the genius that is duct tape. Stoudt, Johnson & Johnson explains, spent 1943 working at the Green River Ordnance Plant, and that's where she noticed something that definitely needed fixing. Boxes of ammunition being packed and sent to the front were sealed with paper tape and then coated in a layer of wax to make the whole thing waterproof. But more often than not, the flimsy tape's tab wouldn't tear through the wax, leaving soldiers struggling to get to their much-needed ammo.

What followed was a flash of inspiration: why not make a waterproof cloth tape? When her supervisors brushed the idea aside, she sat down and wrote a letter to FDR explaining it, complete with diagrams showing how to make it. The letter was passed on to the War Production Board, and duct tape was born.

The world would look pretty different without computers

It was Alan Turing, Scientific American says, who ushered in the computer age.

While the original computers were very literally people who sat down and did computations, Turing proposed something different in a 1936 paper. In it, he described a then-theoretical machine that could be fed information and use it to solve problems, and that's basically a computer.

Fortunately, Alan Turing didn't do what most of us do with our college papers, which is to promptly forget we even wrote them. When World War II rolled around and the Allies needed a way to crack Germany's Enigma code, Turing put his ideas to work. He designed a machine called the Bombe, which was built to search through the possible settings Enigma might have used to encode a message and find the right one, much faster than Bletchley Park's 12,000 codebreakers could on their own.

The Bombe was only part of the equation, though. The German high command also used a more complex cipher, known as Lorenz, and the effort to crack it led to the development of what The Telegraph calls the world's first programmable computer: Colossus. It was the brainchild of Thomas Flowers, and it was so essential to the codebreakers of WWII that it stayed in use well into the Cold War, proving to the world just what computers could do.

How about a shout-out to the Guinea Pig Club of World War II?

Millions of soldiers and civilians suffered unthinkable injuries throughout the war, and that raised a whole new problem: how could doctors improve the way those injuries were treated? Enter Dr. Archibald Hector McIndoe.

At the beginning of the war, The PMFA Journal says McIndoe was one of only four qualified plastic surgeons in England, and he'd learned from the best — Sir Harold Gillies, who had made major advancements in the field of reconstructive surgery already, mostly during the work he'd done with veterans from World War I. The British government already knew that there was going to be another influx of soldiers needing the services of a reconstructive surgeon, and McIndoe was sent to the Queen Victoria Hospital, where he worked mostly with veterans of the RAF.

McIndoe and his colleagues quickly found they were vastly unprepared for the number and severity of the injuries they were seeing. In 1941, the Guinea Pig Club was founded as an informal club for his recovering patients.

Each member had to have undergone two surgeries, and McIndoe's approach was groundbreaking for two reasons: he not only pioneered techniques that are still being used today, but he also treated the soul as well as the body. The Guinea Pigs were given psychological and emotional support as well as physical care, and by the end of the war, the club had 649 members, many of whom went on to successfully find a new place in the world.

Blood banks were born during the war

The medical world learned some important things from WWI, including the fact that blood loss could be as deadly as the wounds themselves. By 1940, another massive war was already underway in Europe, and that's when Harvard biochemist Edwin Cohn got involved. It was Cohn, per the Smithsonian, who came up with the process of separating blood plasma into components that were easier to ship and store, but he wasn't the only one we need to thank for some seriously life-saving science.

The American Chemical Society reports that it was Dr. Charles Richard Drew who conducted groundbreaking research on preserving blood long-term. His doctoral research focused on things like anticoagulants, preservatives, fluid replacement, and blood chemistry, and those findings earned him an appointment as head of the Blood for Britain Project at a time when the country was enduring sustained attacks. Drew and his team pioneered methods of separating and storing donated blood, which made possible first the mass shipment of blood supplies from New York to Britain and then the Red Cross program to mass-produce dried plasma.

He also developed bloodmobiles, the mobile collection centers still in use today. He's known as the father of the blood bank, and his work has saved countless lives, but it's also worth noting that because of systemic racism, he was barred from donating blood himself under segregation policies he called "unscientific and insulting to African Americans."

Not all inventions from World War II are for the greater good

Chemical weapons are terrifying, and there's a good chance that the nerve gas sarin might sound familiar. According to the CDC, sarin was used in two terrorist attacks on Japanese soil in 1994 and 1995, and it has a terrible list of effects that include paralysis, convulsions, and the risk of respiratory failure and death.

Sarin, Chemical & Engineering News explains, was discovered by accident by a Third Reich scientist named Gerhard Schrader. Schrader was working for a division of IG Farben that had been tasked with finding new insecticides to reduce Germany's reliance on outside crops. Instead, he discovered sarin nerve gas. IG Farben jumped on it and took it right to the military.

Germany approved the construction of a facility dedicated to producing sarin in 1943, headed by 1938 Nobel Prize winner and dedicated Nazi Richard Kuhn. Still, when it came time to use it, Hitler refused. Exactly why is still a matter of speculation: some suggest he didn't want to use it because he'd experienced mustard gas firsthand during WWI, while others suggest it was simply a matter of not wanting to contaminate areas that would soon be occupied by German troops.

The German chemical weapons factories were disassembled, but the discovery of nerve agents kicked off a race to stockpile chemical weapons, and about 50 years later, the Chemical Weapons Convention was signed to try to stop exactly that.