What Does An IQ Test Actually Measure?

If you're like many people, you might fall into one of two camps when it comes to IQ tests: 1) "Who cares? It's a load of bull," or, 2) "Hey, look at how high my score is! Neener, neener." At the very least, you might have taken an IQ test at some point in your life, perhaps as a child. You might know that a score of 100 is considered "average" (more on that definition later). You might have read that a famous genius such as physicist Albert Einstein had an IQ of 100-and-something-something-very-high. Or, you might have gotten curious one bored afternoon and taken one of those horrible, completely unscientific, 10-minute online IQ tests. But in the end, what does "IQ" really mean, and what does it really indicate?

In short, "IQ" stands for "intelligence quotient," and a quotient is the result of division. In the original scheme, an IQ test asks a bunch of questions, and your answers are tallied to produce your "mental age." That mental age is divided by your chronological (physical) age, and the result is multiplied by 100. That's it. And if you're thinking "mental age" sounds like a bogus measure, well, that's part of the ongoing discussion about IQ test validity and relevancy.

The most widely used IQ tests date from the early to mid-20th century and are supposed to measure language skills, spatial and logical reasoning, processing speed, and similar abilities. Ultimately, as Verywellmind explains, IQ tests are comparative: an individual score only makes sense when compared against the scores of many other people.

The recent birth of 'intelligence'

In the present day, it might seem obvious that we'd want to test people's mental abilities, especially children's, to see what they might be good at. But that's only because we have a greater degree of choice than ever before. Was such a measurement necessary in the past? If a kid was bright, it was obvious. If someone was good at something, they'd pursue it and do it well. End of story. If someone was of a certain social standing, that person might pursue higher education regardless of intellect. If not, that person would be a laborer, farmer, etc., like generations prior. "Intelligence" was a meaningless metric. Depending on your stance, it still is.

As a metric, "intelligence" only came into being in the late 19th century with British polymath Sir Francis Galton. As Verywellmind explains, Galton wanted to know if intellectual acuity was inherited, like eye color or height. He also envisioned gathering actual statistical data to verify his ideas, not just lobbing his opinions around. In 1904, per the Stanford-Binet Test website, the French government approached psychologist Alfred Binet and his student Theodore Simon because it wanted a way to detect which children had "notably below-average levels of intelligence for their age," hence the whole "mental age" definition underlying IQ tests. Building on Galton's work, Binet developed the Binet-Simon Scale, which consolidated the measurement of intelligence into one number. Lewis Terman of Stanford University revised it in 1916 and dubbed it the Stanford-Binet Test.

What do IQ tests test?

Plenty of other IQ tests have hit the market since the development of the Stanford-Binet test. As Verywellmind overviews, the most notable is the Wechsler Adult Intelligence Scale (WAIS), developed in 1955 and in its fourth edition (WAIS-IV) since 2008. Each test differs from the others in certain ways: it focuses on different skills, varies in its calculations, and sometimes produces multiple scores instead of one number. The WAIS focuses on adults, for instance, while the Stanford-Binet was originally developed for children. Other tests, like the Universal Nonverbal Intelligence Test, are designed to accommodate test-takers with disabilities, while the Peabody Individual Achievement Test is academic in nature. In other words, definitions of "IQ" vary, and we can't universally say what an IQ test actually tests aside from "intelligence," and even that definition is up for debate.

To help us understand IQ a bit better, we can look to the WAIS-IV. As described in some detail on Pearson Assessments, the test contains 10 core subtests that focus on four domains (areas) of intelligence: "verbal comprehension, perceptual reasoning, working memory, and processing speed." Its puzzles and tasks include visualizing how shapes fit together, picking out patterns, and making basic deductions about relationships between objects. The test not only checks basic cognitive functioning but can also help identify issues resulting from brain injuries, psychiatric conditions, and more, as Verywellmind explains. It does not take creativity, inventiveness, psychological well-being, self-awareness, emotional intelligence, or social skills into account.

How do IQ tests test?

We mentioned that the original 1916 Stanford-Binet Test measured "mental age." As Thomas explains, psychologist William Stern had coined the term "intelligence quotient" four years earlier, in 1912, as a way of expressing mental age relative to chronological age. If a 10-year-old test-taker (chronological age) scored as well as a typical 12-year-old (mental age), that person's IQ was 12 divided by 10, multiplied by 100, or 120.
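The ratio formula can be sketched in a few lines of Python. This is a minimal illustration that assumes mental age has already been determined by the test; the function name `ratio_iq` is hypothetical and not part of any real scoring software.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Stern-style ratio IQ: mental age divided by chronological age, times 100."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    # Multiply before dividing so whole-number inputs give exact results.
    return 100 * mental_age / chronological_age


# The worked example from the text: a 10-year-old performing like a 12-year-old.
print(ratio_iq(12, 10))  # 120.0
```

The formula also exposes a weakness of the ratio approach: mental age levels off in adulthood while chronological age keeps rising, so the quotient drifts downward for adults. That is one reason later tests moved to the statistical scoring that followed.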

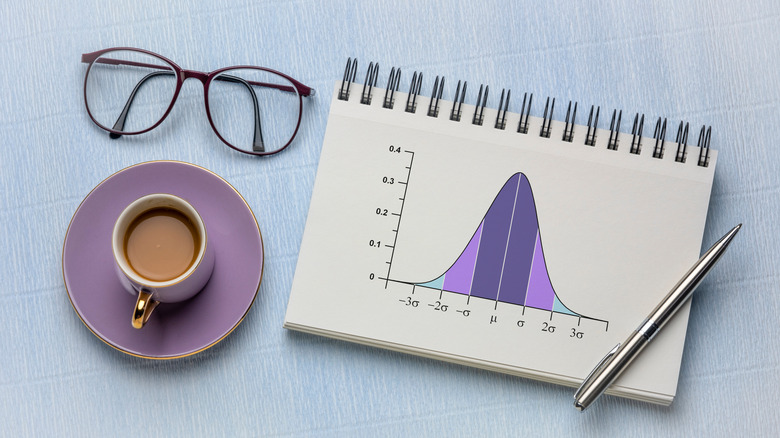

Later tests like the Wechsler Adult Intelligence Scale (WAIS) kept the same numerical scale, with 100 as average, but ratcheted up the underlying mathematics. Care Patron breaks down how the WAIS-IV calculates four different types of scores: raw, scaled, standard, and composite. Raw scores are the points a test-taker earns from their actual answers, and composite scores are the final scores. In the end, standard deviations (SD) are critical to the equation. A standard deviation measures how widely scores are scattered around the mathematical mean, which lets the test determine how uncommon a given score is compared with the average.
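The core idea of this kind of norm-referenced scoring is to express a raw score as a distance from a norm group's average, measured in standard deviations, and then re-express that distance on a fixed scale. Wechsler subtest scaled scores use a mean of 10 and an SD of 3, and composite scores use a mean of 100 and an SD of 15. The sketch below is a deliberately simplified, linear version of that idea. The real WAIS-IV converts raw scores to scaled scores, and sums of scaled scores to composites, through published age-based lookup tables rather than a single formula. The norm-group mean and SD here are invented, and the function names are hypothetical.

```python
# Simplified, linear sketch of norm-referenced scoring. Real tests such as the
# WAIS-IV use age-banded lookup tables instead of one formula, and the
# norm-group numbers below are invented for illustration.

def z_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Distance of a raw score from the norm-group mean, in standard deviations."""
    if norm_sd <= 0:
        raise ValueError("standard deviation must be positive")
    return (raw - norm_mean) / norm_sd


def scaled_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Subtest-style scaled score on a mean-10, SD-3 metric."""
    return 10 + 3 * z_score(raw, norm_mean, norm_sd)


def deviation_iq(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Composite-style score on a mean-100, SD-15 metric."""
    return 100 + 15 * z_score(raw, norm_mean, norm_sd)


# Hypothetical norm group for one subtest: mean raw score 40, SD 8.
NORM_MEAN, NORM_SD = 40, 8

for raw in (32, 36, 40, 48):
    print(raw, scaled_score(raw, NORM_MEAN, NORM_SD), deviation_iq(raw, NORM_MEAN, NORM_SD))
```

With these made-up norms, a raw score of 48 sits exactly one SD above the mean, so it maps to a scaled score of 13 and a deviation score of 115. A raw score of 32 sits one SD below and maps to 7 and 85.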

In IQ tests, the standard deviation is set at 15 points, and the more SDs a score lies from the average, the more uncommon it is. A score of 100 is the most common, with most scores (roughly 68%) falling within the bell curve's bulge between 85 (1 SD below 100) and 115 (1 SD above 100). Omni Calculator says that 99.7% of the population falls between 55 and 145, or within 3 SDs of the average. This means that IQ scores not only depend on a mountain of data for patterns to emerge but are also comparative: each score corresponds to a percentile. People have a "high" or "low" score only in comparison to others.
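Because IQ scores are modeled as a normal (bell-curve) distribution with a mean of 100 and an SD of 15, any score can be turned into a percentile using the normal cumulative distribution function. Here is a minimal sketch using only Python's standard-library `math.erf`. The function names are hypothetical, and real tests report percentiles from their own norm tables, which only approximate a perfect bell curve.

```python
import math

MEAN, SD = 100, 15  # the conventional IQ scale


def iq_to_percentile(iq: float) -> float:
    """Percentage of a normal population scoring at or below `iq`."""
    z = (iq - MEAN) / SD
    # Normal CDF expressed through the error function.
    return 100 * 0.5 * (1 + math.erf(z / math.sqrt(2)))


def share_between(low: float, high: float) -> float:
    """Percentage of the population scoring between `low` and `high`."""
    return iq_to_percentile(high) - iq_to_percentile(low)


print(round(share_between(85, 115), 1))  # 68.3  (within 1 SD)
print(round(share_between(55, 145), 1))  # 99.7  (within 3 SDs)
print(round(iq_to_percentile(130), 1))   # 97.7  (2 SDs above average)
```

The last line illustrates the comparative nature of the scale: a score of 130 is "high" only because roughly 97.7% of a normally distributed population would score at or below it.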

Criticisms and drawbacks

IQ tests have drawn on-again, off-again ire for years. Beyond accusations of meaningless terms and questionable methodologies, IQ tests have come under fire for ethical reasons. Big Think, for instance, states that IQ tests have been "weaponized by racists and eugenicists," as in the 1927 U.S. Supreme Court case Buck v. Bell, which upheld the forced sterilization of people deemed "feebleminded," a label assigned partly on the basis of IQ testing. But of course, this is a matter of how the test is used, not whether it accurately assesses intelligence.

IQ tests have proven reliable for decades, but reliability is not validity. In other words, a test may produce consistent results over time (be reliable) yet fail to measure what it sets out to measure (be valid). If an IQ test isn't valid, then all it's testing is whether its test-takers are good at taking the test.

Intelligence, some argue, should be a matter of excelling in specific areas, not a single, standardized measurement. In his 1983 book "Frames of Mind," Howard Gardner outlines his Theory of Multiple Intelligences, which holds that different people are clever in different ways. As Verywellmind explains, these include linguistic-verbal intelligence, musical intelligence, logical-mathematical intelligence, interpersonal intelligence (understanding others), intrapersonal intelligence (understanding oneself), and more. Would Paul McCartney join Albert Einstein for a little afternoon math session? No. But we call both "geniuses." In this light, future IQ tests, or whatever we end up calling them, might resemble vocational assessments more than anything.