How 2022's Nobel Prize Winners In Physics Proved Einstein Wrong

Every now and then, news hits that "such and such has gotten a Nobel Prize. Hurray!" So Nobel Prizes are good, right? Big, prestigious something-or-other awards that confer some vague honor upon the recipient, and then: done. Folks return to their keyboards, lattes, gas bills, what-have-you, and nothing seems to change. What's the big deal, anyway?

To answer that question, let's look at past recipients on the Nobel Prize website. Martin Luther King, Jr. won the Nobel Peace Prize in 1964 "for his non-violent struggle for civil rights for the Afro-American population." Sir Alexander Fleming won the Nobel Prize in Physiology or Medicine in 1945 "for the discovery of penicillin and its curative effect in various infectious diseases." In the realms of art, science, and humanitarian work, Nobel Prizes are awarded to people "who, during the preceding year, shall have conferred the greatest benefit to humankind," as award creator Alfred Nobel said in his will in 1895. He gave away his fortune to subsequent generations, to reward those who advance and enrich the world within the domains of medicine, physics, chemistry, literature, and peace.

This year, the Nobel Prize in Physics was split between Alain Aspect, John Clauser, and Anton Zeilinger. Even if you've never heard of them, their work proved Einstein wrong, shattered our understanding of reality itself, and led directly to the quantum computing revolution. To understand how and why, we've got to do a big-brain dive into history, science, and the very nature of space and time.

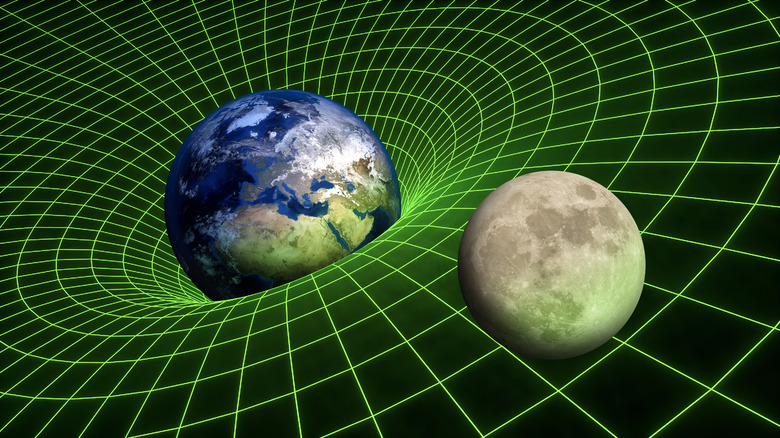

The mesh of spacetime

So what's the official reason for granting Alain Aspect, John Clauser, and Anton Zeilinger 2022's Nobel Prize in Physics? "For experiments with entangled photons, establishing the violation of Bell inequalities and pioneering quantum information science," as the Nobel Prize website says. To understand what that means, we've got to go back to that most oft-cited, frizzy-haired genius of math and science: Albert Einstein.

Einstein's 1905 Theory of Special Relativity (the E = mc² one) said that anything with mass (you, a leaf, Mars) can never move faster than the speed of light. Space and time are one bounded mesh, spacetime; moving faster through space means moving slower through time. It so happens that on Earth, folks are close enough together, and slow-moving enough, to experience time the same. Einstein's 1915 Theory of General Relativity said that gravity is like a dent in spacetime. An object like Earth makes a dent big enough to make the moon circle around it, like a ball around a drain. The sun is so massive that all of our solar system's planets circle around it, and so on (both per Live Science).
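For the curious, here's a rough sketch of why nobody on Earth notices time slowing down. It uses the standard time dilation formula from special relativity, gamma = 1 / sqrt(1 - v²/c²). The function names and the example speeds are our own picks for illustration, not anything from Einstein's papers.

```python
import math

C = 299_792_458.0  # speed of light in metres per second (exact by definition)


def lorentz_factor(speed_m_per_s):
    """Return gamma = 1 / sqrt(1 - v^2 / c^2) for a speed below light speed."""
    if not 0 <= speed_m_per_s < C:
        raise ValueError("speed must be at least 0 and below the speed of light")
    beta = speed_m_per_s / C
    return 1.0 / math.sqrt(1.0 - beta * beta)


def moving_clock_seconds(stationary_seconds, speed_m_per_s):
    """Seconds ticked off by a moving clock while stationary_seconds pass at rest."""
    return stationary_seconds / lorentz_factor(speed_m_per_s)


if __name__ == "__main__":
    # Illustrative speeds only: a rough airliner cruising speed and 80% of light speed.
    examples = [("airliner, about 250 m/s", 250.0), ("80% of light speed", 0.8 * C)]
    for label, speed in examples:
        print(label, lorentz_factor(speed), moving_clock_seconds(3600.0, speed))
```

At airliner speeds the factor differs from 1 by less than a trillionth, which is why we all seem to share one clock. At 80% of light speed it's about 1.67, so an hour for someone standing still is only 36 minutes for the traveler.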

In the end, Einstein believed that space exists "locally," meaning that objects affect each other by being in direct contact with each other, per Space. To creatures like us, that should make sense. Why would a book fall over if nothing pushed it? But when objects get really, really small the rules change, and things get weird.

Spooky action at a distance

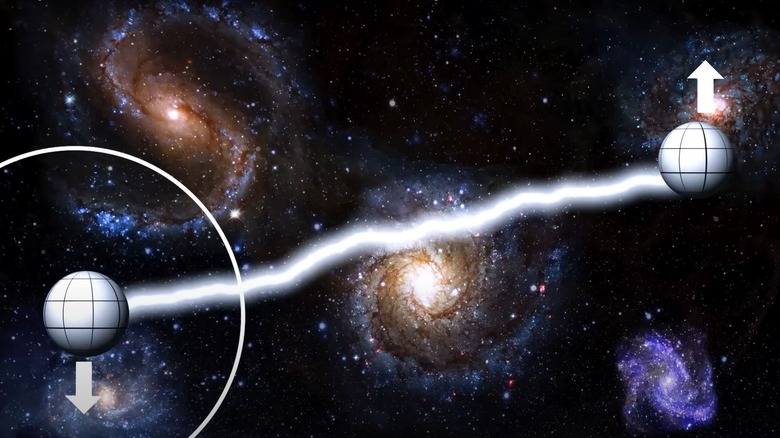

So how in the heck could a particle a galaxy away affect what happens in my coffee mug? That's impossible, surely. Nothing travels faster than light, Einstein said — things far away are in my past and will take some time to get here. For big objects, this is totally true and Einstein was right. But for objects smaller than an atom — quarks, neutrinos, bosons, electrons — it's false. At sub-atomic size, quantum mechanics — the physics of the really small — has proven that space is not "local," as The Conversation explains. Particles can affect other particles light years away, instantly. How? We'll get to that later.

Einstein thought that such "spooky action at a distance" was an absurd idea. Other physicists, like Richard Feynman, thought differently. In 1935, in the early days of quantum mechanics, Einstein, Boris Podolsky, and Nathan Rosen published a paper arguing that quantum physics, with its non-local effects, had to be incomplete (readable on the Physical Review Journal Archive). On the other side, Richard Feynman, Julian Schwinger, and Sin-Itiro Tomonaga each took quantum physics as real and built on it. Those three, much like the 2022 winners, were eventually awarded the Nobel Prize in Physics, in 1965.

Feynman, like Einstein, worked in the realm of mathematics. It took until the 1960s for another physicist, John Bell, to develop the math into something testable. It took until the 1970s for 2022 Nobel Prize winner John Clauser to actually test it.

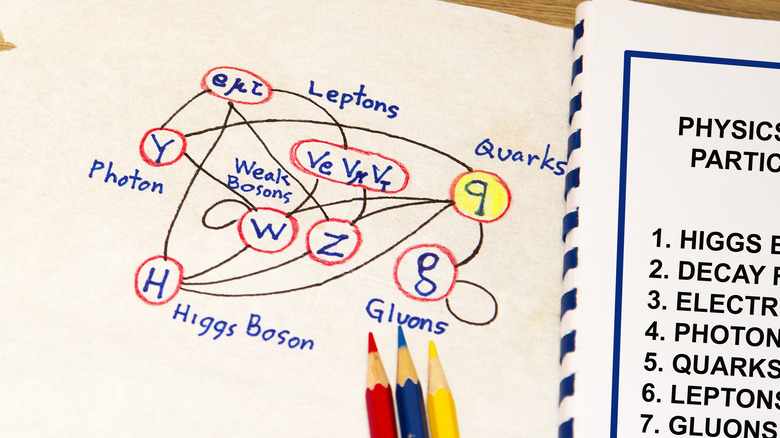

The entanglement of Bell's Theorem

To be clear, Einstein didn't doubt any researcher's results; he doubted the reasons for the results. If quantum mechanics is real and two particles can interact at any distance, instantly, he figured there must be some "hidden variables" at work, something we don't know about. Physicist John Bell, however, demonstrated that there are no local hidden variables, none, that can account for quantum mechanics, as Brilliant outlines. This work, known as Bell's Theorem or Bell's Inequality, focused on sussing out patterns in particle behavior that could be tested later, as Dr. Ben Miles explains on YouTube.

As far back as 1935, physicist Erwin Schrödinger had tried to explain quantum mechanics through his now-famous Schrödinger's Cat thought experiment. In a nutshell: if a cat is in a box, is it alive or dead? Well, so long as I don't check, there's a 50/50 chance either way (barring any horrible smells).

Similarly, imagine that we want to measure a light particle (a photon), which can oscillate either vertically or horizontally, like a wave on a rope. Either a) it's oscillating one way from the moment it's created, and we don't know which way until we measure it (this is what Einstein thought), or b) it obtains a characteristic the moment it's checked; before then, it's in an either/or "superposition." Believe it or not, the latter is true. And when two particles are entangled, measuring one means its twin shows the opposite measurement, wherever in the universe it happens to be. That's quantum entanglement.
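If you'd like to see the kind of pattern Bell was talking about, here's a minimal sketch of one common version of his test, usually called the CHSH inequality. It assumes the textbook quantum prediction for a pair of entangled photons with opposite polarizations, E = -cos(2(a - b)), and a commonly used set of analyzer angles. The function names are ours, and nothing here reproduces the actual lab setups of the prize winners.

```python
import math
from itertools import product


def quantum_correlation(angle_a_deg, angle_b_deg):
    """Predicted average of (result A x result B) for analyzers at the given angles.

    Assumes the textbook formula E = -cos(2 * (a - b)) for an entangled photon
    pair whose polarizations always come out opposite at equal angles.
    """
    return -math.cos(2.0 * math.radians(angle_a_deg - angle_b_deg))


def chsh_score(e_ab, e_ab2, e_a2b, e_a2b2):
    """Combine four correlations into the CHSH quantity S."""
    return e_ab - e_ab2 + e_a2b + e_a2b2


def max_hidden_variable_score():
    """Largest |S| when every result (+1 or -1) is fixed before measurement."""
    best = 0
    # a, a2 are the first photon's preset answers for its two analyzer settings;
    # b, b2 are the twin's preset answers for its two settings.
    for a, a2, b, b2 in product((-1, 1), repeat=4):
        best = max(best, abs(chsh_score(a * b, a * b2, a2 * b, a2 * b2)))
    return best


def quantum_score(a=0.0, a2=45.0, b=22.5, b2=67.5):
    """|S| predicted by quantum mechanics for a commonly used set of angles (degrees)."""
    return abs(chsh_score(
        quantum_correlation(a, b),
        quantum_correlation(a, b2),
        quantum_correlation(a2, b),
        quantum_correlation(a2, b2),
    ))


if __name__ == "__main__":
    print("Preset answers can reach at most:", max_hidden_variable_score())
    print("Quantum mechanics predicts:", quantum_score())
```

If each photon carried preset answers, as in Einstein's picture, the score could never climb above 2. Quantum mechanics predicts about 2.83 (that's 2 times the square root of 2). Real experiments estimate the score from many detected photon pairs rather than from a formula, so treat this as an illustration of the gap, not a simulation of an experiment.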

A provably weird universe

But really, you might think, how could something not have a characteristic until it's measured? Judging by everyday life, such a notion is absurd. If I drop a coin behind the couch, of course, it lands face up or face down regardless of whether or not I see it. If I check and it's face up, then that's the way the coin's been since I dropped it. And yet, as we keep learning, this is simply not the case at sizes smaller than an atom. Why? No one has any clue yet, don't worry. But thanks to the work of 2022's Nobel Prize winners, we know it's true. Those winners, Alain Aspect, John Clauser, and Anton Zeilinger, are the ones who finally developed ways to test and measure quantum entanglement in real life, beyond the theoretical realm of paper and mathematics.

All three built on the work that came before, starting with Clauser and ending with Zeilinger, as Nature explains. The details of their tests, described on the excellent YouTube channel PBS Space Time, are beyond the scope of this article. Suffice it to say that each researcher closed loopholes in testing methods that might have allowed for the presence of Einstein's hidden variables. Clauser did his work in the 1970s, Aspect in the '80s, and Zeilinger in 1997. As Zeilinger humbly said, "This prize would not be possible without the work of more than 100 young people over the years."

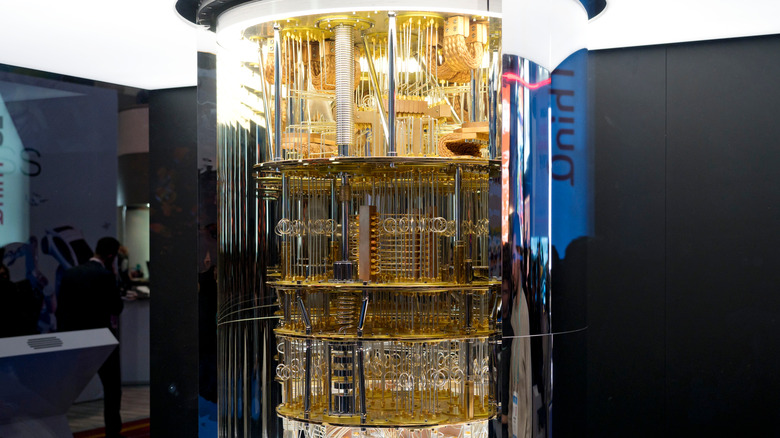

The quantum computer leap

Alright, so quantum weirdness is true. So what? you might ask. Back when John Bell wrote his hidden variable-disproving theorems in the 1960s there was no practical use for investigating the truth of quantum entanglement. David Kaiser, physicist at MIT and colleague of the Nobel Prize-winning Clauser, said of quantum physics in the '60s, "People would say, in writing, that this isn't real physics — that the topic isn't worthy," per Nature. And now? We've got quantum computers based on such supposedly once-useless theory.

And what in the world is a quantum computer? Why, it's a computer that isn't there until you log in, we glibly joke. But truthfully, explaining quantum computers would require thousands more words. The short version is, as Quanta Magazine outlines: they're computers with greatly expanded processing power because of the same quantum mechanics that governs sub-atomic particles like electrons. Regular computers make computations based on values of 1s and 0s (binary values). In a quantum computer, quantum superpositions (the possibility of either a 1 or a 0 until measured) allow for kinds of calculations that regular computers struggle with. This is useful because microchips are reaching the limit of their smallness. The smallest are 10 nanometers, smaller than a single virus, per semiconductor company ASML.
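To make "either a 1 or a 0 until measured" a bit more concrete, here's a toy sketch that runs on a perfectly ordinary computer. It assumes the simplest possible case, a handful of quantum bits in an equal superposition, and the function names are made up for this example. It leaves out the interference and entanglement effects that give real quantum computers their edge, so it shows only how measurement and the bookkeeping work.

```python
import math
import random


def uniform_superposition(num_qubits):
    """Amplitudes for num_qubits quantum bits, all equally likely to read 0 or 1."""
    if num_qubits < 1:
        raise ValueError("need at least one qubit")
    size = 2 ** num_qubits  # one amplitude per possible string of 0s and 1s
    return [1.0 / math.sqrt(size)] * size


def measurement_probabilities(amplitudes):
    """Chance of seeing each outcome: the squared size of its amplitude."""
    return [abs(amplitude) ** 2 for amplitude in amplitudes]


def measure(amplitudes, rng):
    """Pick one outcome at random, returned as a string of 0s and 1s."""
    weights = measurement_probabilities(amplitudes)
    index = rng.choices(range(len(amplitudes)), weights=weights, k=1)[0]
    num_qubits = (len(amplitudes) - 1).bit_length()
    return format(index, "0{}b".format(num_qubits))


if __name__ == "__main__":
    state = uniform_superposition(3)
    print("amplitudes to keep track of:", len(state))
    print("one measurement gives:", measure(state, random.Random()))
```

Three quantum bits already need 8 amplitudes, and every extra one doubles the count. That doubling is a big part of why ordinary computers struggle to imitate quantum ones.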

In the end, such work is possible not only because of the current Nobel Prize winners, but because of nearly 100 years of collective research. And in a very real way, we also have Einstein's skepticism to thank.